Glass Box

Glass Box is an approach to AI design that makes the system’s reasoning transparent and auditable. Where black box systems hide their decision-making process, glass box systems reveal each step, allowing humans to understand, verify, and intervene when needed.

This isn’t just about explainability—it’s about maintaining human agency and building trust through transparency. Glass box AI serves as a cognitive partner rather than an opaque oracle.

Core Principles

Visible Reasoning Chains

Every decision the AI makes should be traceable through explicit steps. Like showing your work in mathematics, the system exposes its thought process through:

- Structured scratch pads that capture intermediate reasoning

- Step-by-step breakdowns of complex decisions

- Clear indication of what information influenced each conclusion

Human-in-the-Loop by Design

Glass box systems assume human oversight as a feature, not a bug:

- Pause points for human verification at critical decisions

- Ability to inspect and modify any step in the process

- Clear handoffs between automated and human judgment

- Preservation of human agency at every level
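
A pause point can be sketched as a pipeline that stops at every step marked critical and asks a reviewer before continuing. This is a minimal illustration, not a reference implementation: `Step`, `run_with_pause_points`, and `example_reviewer` are hypothetical names, and the reviewer here is a stand-in function where a real system would prompt a person.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    name: str
    output: str
    critical: bool = False  # critical steps pause for human verification


# A reviewer receives a step and returns None to approve it,
# or a replacement output string to override it.
Reviewer = Callable[[Step], Optional[str]]


def run_with_pause_points(steps: List[Step], reviewer: Reviewer) -> List[Step]:
    """Walk the steps in order, pausing at each critical one for review."""
    reviewed = []
    for step in steps:
        if step.critical:
            correction = reviewer(step)
            if correction is not None:
                # Record the human's version without mutating the original step.
                step = Step(step.name, correction, step.critical)
        reviewed.append(step)
    return reviewed


def example_reviewer(step: Step) -> Optional[str]:
    # Stand-in for a real human prompt: overrides one known step.
    if step.name == "classify image":
        return "informative"
    return None


steps = [
    Step("read page context", "product page for hiking boots"),
    Step("classify image", "decorative", critical=True),
]
result = run_with_pause_points(steps, example_reviewer)
for step in result:
    print(f"{step.name}: {step.output}")
```

Only the critical step reaches the reviewer, so the handoff between automated and human judgment is explicit in the data rather than implied by the interface.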

Auditable Outputs

Every output includes not just the result, but the path to get there:

- Source attribution for factual claims

- Confidence levels for different assertions

- Alternative paths considered but not taken

- Assumptions made and constraints applied
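
One way to make these four items concrete is to return them as fields alongside the result. The sketch below assumes a simple record shape. `Claim` and `AuditableOutput` are hypothetical names, and the three confidence labels are an illustrative choice.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

ALLOWED_CONFIDENCE = ("high", "medium", "low")


@dataclass
class Claim:
    text: str
    source: str       # where the claim comes from
    confidence: str   # one of ALLOWED_CONFIDENCE

    def __post_init__(self):
        if self.confidence not in ALLOWED_CONFIDENCE:
            raise ValueError(f"unknown confidence level: {self.confidence}")


@dataclass
class AuditableOutput:
    result: str
    claims: List[Claim] = field(default_factory=list)
    alternatives_rejected: List[str] = field(default_factory=list)
    assumptions: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict converts nested dataclasses, so claims serialize too.
        return json.dumps(asdict(self), indent=2)


output = AuditableOutput(
    result="Brown leather hiking boots on a rock",
    claims=[Claim("The image shows hiking boots", "image analysis", "high")],
    alternatives_rejected=["Empty alt text (image is not decorative)"],
    assumptions=["The page is a product listing"],
)
print(output.to_json())
```

Because the path travels with the result, a reviewer or a downstream tool can check sources and assumptions without rerunning the system.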

Expert Reasoning Mapping

Glass box systems encode expert thought patterns directly into AI scratch pads. This encoding:

- Captures the actual decision-making process experts use

- Surfaces edge cases and potential issues early in the reasoning chain

- Constrains the LLM to follow proven reasoning paths rather than wandering

- Creates structured tokens that guide the model toward expert-like conclusions

Glass Box vs. Black Box

| Glass Box | Black Box |
|---|---|
| Shows reasoning steps explicitly | Hides internal processing |
| Allows inspection at any point | Provides only final output |
| Human can verify and modify | Human must accept or reject wholesale |
| Builds trust through transparency | Requires blind faith |
| Errors can be traced and fixed | Errors are mysterious |
| Learning happens through observation | No learning opportunity |

Implementation Patterns

Structured Scratch Pads

Instead of letting AI reason invisibly, require it to “think out loud” in structured formats:

STEP 1: Understanding the request

- User wants: [explicit interpretation]

- Key constraints: [identified limitations]

- Success criteria: [measurable outcomes]

STEP 2: Gathering context

- Relevant information: [sources and facts]

- Assumptions made: [explicit statements]

- Confidence level: [high/medium/low with reasoning]
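
A template like this is only useful if something checks that the model filled it in. As a rough sketch, the checker below reports which fields a returned scratch pad left empty or omitted. `REQUIRED_FIELDS` and `missing_fields` are hypothetical names, and the parsing assumes the "- Field: value" layout shown above.

```python
REQUIRED_FIELDS = [
    "User wants",
    "Key constraints",
    "Success criteria",
    "Relevant information",
    "Assumptions made",
    "Confidence level",
]


def missing_fields(scratch_pad: str) -> list:
    """Return the required fields that are absent or have an empty value."""
    present = set()
    for line in scratch_pad.splitlines():
        line = line.strip()
        if line.startswith("- ") and ":" in line:
            name, value = line[2:].split(":", 1)
            if value.strip():
                present.add(name.strip())
    return [name for name in REQUIRED_FIELDS if name not in present]


sample = """STEP 1: Understanding the request
- User wants: alt text for a product photo
- Key constraints: under 125 characters
- Success criteria:
STEP 2: Gathering context
- Relevant information: page sells hiking boots
- Assumptions made: image is informative, not decorative
- Confidence level: medium, page context is thin
"""
print(missing_fields(sample))
```

A non-empty result can be used to reject the response or to send it back to the model before a human ever sees it.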

Decision Points Documentation

Make every fork in the road visible:

DECISION: Which approach to take?

- Option A: [description] | Pros: [...] | Cons: [...]

- Option B: [description] | Pros: [...] | Cons: [...]

- SELECTED: Option A

- REASONING: [explicit explanation]
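
The same record can be held as data and rendered on demand, which also makes it possible to reject a decision that names no valid option or gives no reasoning. This is a sketch under assumed names (`Option`, `Decision`), and the example content is invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Option:
    label: str
    description: str
    pros: List[str]
    cons: List[str]


@dataclass
class Decision:
    question: str
    options: List[Option]
    selected: str   # label of the chosen option
    reasoning: str

    def __post_init__(self):
        labels = [option.label for option in self.options]
        if self.selected not in labels:
            raise ValueError(f"selected option {self.selected!r} is not listed")
        if not self.reasoning.strip():
            raise ValueError("a decision must state its reasoning")

    def render(self) -> str:
        lines = [f"DECISION: {self.question}"]
        for o in self.options:
            lines.append(
                f"- Option {o.label}: {o.description}"
                f" | Pros: {', '.join(o.pros)} | Cons: {', '.join(o.cons)}"
            )
        lines.append(f"- SELECTED: Option {self.selected}")
        lines.append(f"- REASONING: {self.reasoning}")
        return "\n".join(lines)


decision = Decision(
    question="Which approach to take?",
    options=[
        Option("A", "Describe the product", ["matches page purpose"], ["longer"]),
        Option("B", "Mark as decorative", ["shorter"], ["hides content"]),
    ],
    selected="A",
    reasoning="The image is the main content of a product page.",
)
print(decision.render())
```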

Progressive Disclosure

Start with high-level reasoning, allow drilling down:

- Summary view: Key decision and outcome

- Detailed view: Full reasoning chain

- Debug view: All intermediate steps and alternatives
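
The three views can come from one stored trace, with the level deciding how much of it to show. The sketch below assumes a hypothetical `Trace` structure and a `render` function. The level names mirror the list above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TraceStep:
    description: str
    alternatives: List[str] = field(default_factory=list)


@dataclass
class Trace:
    decision: str
    outcome: str
    steps: List[TraceStep]


def render(trace: Trace, level: str = "summary") -> str:
    """Render the same trace at summary, detailed, or debug depth."""
    if level not in ("summary", "detailed", "debug"):
        raise ValueError(f"unknown level: {level}")
    lines = [f"Decision: {trace.decision}", f"Outcome: {trace.outcome}"]
    if level == "summary":
        return "\n".join(lines)
    for number, step in enumerate(trace.steps, start=1):
        lines.append(f"{number}. {step.description}")
        if level == "debug":
            # Debug view also lists the paths that were considered and dropped.
            for alternative in step.alternatives:
                lines.append(f"   considered: {alternative}")
    return "\n".join(lines)


trace = Trace(
    decision="Write descriptive alt text",
    outcome="Brown leather hiking boots on a rock",
    steps=[
        TraceStep("Read page context", ["Ignore page context"]),
        TraceStep("Classify image as informative", ["Classify as decorative"]),
    ],
)
print(render(trace, "debug"))
```

Storing the full trace once and filtering at display time means the summary can never drift out of sync with the detail behind it.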

Examples in Practice

Alt Text Generation

My alt text generation pattern uses glass box principles:

- Shows analysis of page context explicitly

- Documents image classification reasoning

- Explains why specific alt text was chosen

- Allows human to verify each decision step

Code Generation

Instead of generating code mysteriously:

- First shows understanding of requirements

- Outlines approach before implementing

- Comments explain not just what but why

- Flags assumptions for human review

Data Analysis

Rather than producing unexplained insights:

- Shows data cleaning steps

- Documents statistical methods chosen

- Explains outlier handling

- Presents confidence intervals, not just point estimates
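
As an illustration of the last point, the sketch below reports a mean together with an interval around it. It uses a normal approximation, which is an assumption made here for brevity. For small samples a t-distribution would usually be the better choice. The function name and the sample values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_with_interval(values, confidence=0.95):
    """Return (mean, low, high) using a normal approximation."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to estimate spread")
    point = mean(values)
    standard_error = stdev(values) / sqrt(n)
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * standard_error
    return point, point - margin, point + margin


response_times = [10.0, 12.0, 11.0, 13.0, 12.0, 11.5, 10.5, 12.5]
point, low, high = mean_with_interval(response_times)
print(f"mean={point:.2f}, 95% interval=({low:.2f}, {high:.2f})")
```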

Prompt Engineering Translation

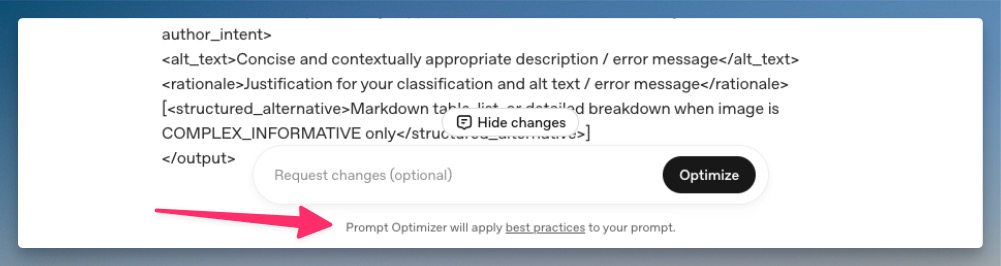

OpenAI’s prompt optimization tool demonstrates glass box principles in AI-assisted prompt engineering:

- Shows explicit analysis of the prompt’s structure and intent

- Documents each improvement with clear reasoning

- Links optimizations to published best practices, making the knowledge base transparent

- Engages in bidirectional dialogue—explaining changes and allowing human refinement

This is glass box AI at its best: I describe what I want in my terms, the system translates to its optimization space while showing every step, then presents the refined prompt with full reasoning. I never lose agency because I can see and approve each translation decision. The tool enhances my prompt engineering capability without requiring me to become a prompt engineering expert. It adapts to where I am, lets me exercise my own judgment and learn, and extends my abilities beyond what I currently know off the top of my head.

When I used this to refine my alt text generation pattern, it preserved my accessibility expertise while adding prompt engineering rigor—a perfect example of ladders enhancing other ladders.

Why Glass Box Matters

Trust Through Transparency

When people can see how decisions are made, they can:

- Trust the system more readily

- Identify when it’s working outside its competence

- Learn from its reasoning patterns

- Correct errors before they compound

Performance Through Structure

The structured scratch pad approach actually improves AI performance:

- Token generation guidance: Each reasoning token the model writes conditions what it generates next, so steps that follow expert reasoning make a correct determination more likely

- Early issue detection: Potential problems and edge cases surface during reasoning, not after

- Constrained possibilities: The LLM can’t wander into irrelevant tangents when following structured steps

- Better accuracy: Following expert reasoning paths leads to expert-like conclusions

Future Model Training

Glass box outputs create valuable training data:

- Reasoning corpus: Every interaction generates structured reasoning examples

- Fine-tuning data: Can train smaller, quantized models to perform at similar levels

- Pattern extraction: Identify which reasoning paths lead to best outcomes

- Continuous learning: Each human correction improves the dataset
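
A reasoning corpus can be as simple as one JSON object per interaction, written as JSON Lines. The sketch below is an assumed record shape, not a format any particular fine-tuning tool requires. `to_training_record` and `write_jsonl` are hypothetical helpers.

```python
import io
import json


def to_training_record(prompt, reasoning_steps, model_answer, human_correction=None):
    """Build one training example, preferring the human-corrected answer."""
    corrected = human_correction is not None
    return {
        "prompt": prompt,
        "reasoning": list(reasoning_steps),
        "answer": human_correction if corrected else model_answer,
        "corrected_by_human": corrected,
    }


def write_jsonl(records, stream):
    """Write one JSON object per line to any text stream."""
    for record in records:
        stream.write(json.dumps(record, ensure_ascii=False) + "\n")


records = [
    to_training_record(
        "Write alt text for the hero image",
        ["Page is a product listing", "Image is informative"],
        "Boots",
        human_correction="Brown leather hiking boots on a rock",
    ),
    to_training_record(
        "Write alt text for the divider graphic",
        ["Image is purely decorative"],
        "",
    ),
]

buffer = io.StringIO()  # stands in for a file opened in text mode
write_jsonl(records, buffer)
print(buffer.getvalue())
```

Keeping the `corrected_by_human` flag makes it possible to weight or filter corrected examples later.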

Regulatory Compliance

Many domains require explainable AI:

- Healthcare decisions must be justifiable

- Financial decisions need audit trails

- Legal applications require clear reasoning

- Accessibility tools must show compliance logic

Human Review Efficiency

Structured reasoning makes review practical:

- Clear intervention points: Humans know exactly where to check and correct

- Faster validation: Structured output is quicker to review than prose

- Precise corrections: Can fix specific reasoning steps without starting over

- Learning opportunity: Reviewers understand why errors occurred

The Connection to Ladders

Glass box AI is essential for building ladders. When AI acts as a ladder helping people climb to new expertise, they need to see each rung—understanding not just what the AI concluded, but how it got there. This transparency transforms AI from a replacement for human judgment into an amplifier of human capability.

Implementing Glass Box Principles

- Design for Inspection: Build interfaces that make reasoning visible by default

- Require Structured Output: Use formats that separate reasoning from conclusions

- Enable Intervention: Create clear points where humans can modify the process

- Document Confidence: Always indicate certainty levels and assumptions

- Preserve Context: Show what information influenced each decision

See Also

- Building Ladders - The philosophy that drives transparent AI design

- Epistemological Translation - Making different types of reasoning visible and translatable

- Alt Text Generation - A practical implementation of glass box principles